
Building PaddlePaddle

Goals

We want the build procedure to:

- Be static and easy to reproduce.

- Generate Python whl packages that can be used widely across many distributions.

- Build different binaries per release to satisfy different environments:

  - Binaries for different CUDA and cuDNN versions, such as CUDA 7.5, 8.0, and 9.0.

  - Binaries containing only capi.

  - Binaries for Python with or without wide unicode support.

- Build docker images with PaddlePaddle pre-installed, so that we can run PaddlePaddle applications directly in docker or on Kubernetes clusters.

To achieve this, we created a repo, https://github.com/PaddlePaddle/buildtools, which provides several docker images that meet the manylinux1 requirements. We can then build PaddlePaddle using these images to generate the corresponding whl binaries.

Run The Build

Build Environments

The pre-built build environment images are:

| Image | Tag |

|---|---|

| paddlepaddle/paddle_manylinux_devel | cuda7.5_cudnn5 |

| paddlepaddle/paddle_manylinux_devel | cuda8.0_cudnn5 |

| paddlepaddle/paddle_manylinux_devel | cuda7.5_cudnn7 |

| paddlepaddle/paddle_manylinux_devel | cuda9.0_cudnn7 |

Start Build

Choose a docker image that suits your environment and run the following commands to start a build:

git clone https://github.com/PaddlePaddle/Paddle.git

cd Paddle

docker run --rm -v $PWD:/paddle -e "WITH_GPU=OFF" -e "WITH_AVX=ON" -e "WITH_TESTING=OFF" -e "RUN_TEST=OFF" -e "PYTHON_ABI=cp27-cp27mu" paddlepaddle/paddle_manylinux_devel /paddle/paddle/scripts/docker/build.sh

After the build finishes, the output whl package is under build/python/dist.

This command mounts the source directory on the host into /paddle in the container, then runs the build script /paddle/paddle/scripts/docker/build.sh in the container. When the script writes to /paddle/build in the container, it actually writes to $PWD/build on the host.
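As a rough illustration of how the parts of the command above fit together, the following shell sketch assembles the same docker run invocation for a chosen image tag and Python ABI, and prints it instead of running it. The function name paddle_build_cmd is hypothetical, and appending a tag from the table above to the image name is an assumption about how a specific build environment would be selected. Any extra Docker runtime setup a GPU build may need is not covered here.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the build command for one image tag.
# $1: image tag from the table above, e.g. cuda8.0_cudnn5
# $2: Python ABI, cp27-cp27m or cp27-cp27mu
# $3: WITH_GPU value, ON or OFF (defaults to OFF)
paddle_build_cmd() {
    tag="$1"
    abi="$2"
    gpu="${3:-OFF}"
    # "$PWD" stays literal here so it expands where the command is finally run.
    printf 'docker run --rm -v "$PWD":/paddle'
    printf ' -e "WITH_GPU=%s" -e "WITH_AVX=ON"' "$gpu"
    printf ' -e "WITH_TESTING=OFF" -e "RUN_TEST=OFF"'
    printf ' -e "PYTHON_ABI=%s"' "$abi"
    printf ' paddlepaddle/paddle_manylinux_devel:%s' "$tag"
    printf ' /paddle/paddle/scripts/docker/build.sh\n'
}

# Review the printed command, then run it from inside the Paddle checkout.
paddle_build_cmd cuda8.0_cudnn5 cp27-cp27mu ON
```

Printing the command first makes it easy to check which image and options a build will use before any container is started.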

Build Options

Users can set the following build options by passing them to docker run with -e. Each option takes either "ON" or "OFF", except PYTHON_ABI:

| Option | Default | Description |
|---|---|---|
| WITH_GPU | OFF | Generates NVIDIA CUDA GPU code and relies on CUDA libraries. |
| WITH_AVX | OFF | Set to "ON" to enable AVX support. |
| WITH_TESTING | ON | Build unit test binaries. |
| WITH_MKL | ON | Build with Intel® MKL and Intel® MKL-DNN support. |
| WITH_GOLANG | ON | Build the fault-tolerant parameter server written in Go. |
| WITH_SWIG_PY | ON | Build with SWIG Python API support. |
| WITH_C_API | OFF | Build capi libraries for inference. |
| WITH_PYTHON | ON | Build with Python support. Turn this off if the build is only for capi. |
| WITH_STYLE_CHECK | ON | Check the code style when building. |
| PYTHON_ABI | "" | Python ABI to build for; can be cp27-cp27m or cp27-cp27mu. |
| RUN_TEST | OFF | Run unit tests immediately after the build. |
| WITH_DOC | OFF | Build docs after building the binaries. |
| WOBOQ | OFF | Generate the WOBOQ code viewer under build/woboq_out. |
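Because a mistyped option value is easy to miss in a long docker run line, a small check can help. The sketch below turns OPTION=VALUE pairs into -e flags and rejects values that the table above does not allow. The function name paddle_env_flags is hypothetical, and the validation rules are only an interpretation of the table, not something the build script is known to enforce.

```shell
#!/bin/sh
# Hypothetical helper: turn OPTION=VALUE pairs into docker "-e" flags.
# PYTHON_ABI must be cp27-cp27m or cp27-cp27mu; every other option must be ON or OFF.
paddle_env_flags() {
    flags=""
    for pair in "$@"; do
        name="${pair%%=*}"
        value="${pair#*=}"
        case "$name" in
            PYTHON_ABI)
                case "$value" in
                    cp27-cp27m|cp27-cp27mu) ;;
                    *) echo "unsupported PYTHON_ABI: $value" >&2; return 1 ;;
                esac ;;
            *)
                case "$value" in
                    ON|OFF) ;;
                    *) echo "$name must be ON or OFF, got: $value" >&2; return 1 ;;
                esac ;;
        esac
        flags="$flags -e \"$name=$value\""
    done
    # Strip the leading space before printing.
    printf '%s\n' "${flags# }"
}

# A capi-only build: enable WITH_C_API and turn Python support off.
paddle_env_flags WITH_C_API=ON WITH_PYTHON=OFF
```

The printed flags can be pasted into the docker run command shown earlier in place of its -e arguments.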

Docker Images

You can get the latest PaddlePaddle docker images by running docker pull paddlepaddle/paddle:<version>, or you can build one yourself.

Official Docker Releases

Official docker images are published as paddlepaddle/paddle. You can choose either latest or an image with a release tag such as 0.10.0. Currently available tags are:

| Tag | Description |

|---|---|

| latest | latest CPU only image |

| latest-gpu | latest binary with GPU support |

| 0.10.0 | release 0.10.0 CPU only binary image |

| 0.10.0-gpu | release 0.10.0 with GPU support |
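The tags in the table above follow a simple pattern: the version (or latest), with a -gpu suffix for GPU images. A minimal sketch of that pattern, assuming a hypothetical helper named paddle_image:

```shell
#!/bin/sh
# Hypothetical helper: build an image reference from a version and a flavor.
# $1: "latest" or a release version such as 0.10.0 (defaults to latest)
# $2: "cpu" (default) or "gpu"
paddle_image() {
    version="${1:-latest}"
    case "${2:-cpu}" in
        cpu) printf 'paddlepaddle/paddle:%s\n' "$version" ;;
        gpu) printf 'paddlepaddle/paddle:%s-gpu\n' "$version" ;;
        *)   echo "flavor must be cpu or gpu" >&2; return 1 ;;
    esac
}

paddle_image 0.10.0 gpu    # prints paddlepaddle/paddle:0.10.0-gpu
```

The result can be passed straight to docker pull, for example docker pull "$(paddle_image latest)".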

Build Your Own Image

Building PaddlePaddle docker images is quite simple, since PaddlePaddle can be installed by just running pip install. A sample Dockerfile is:

FROM nvidia/cuda:7.5-cudnn5-runtime-centos6

RUN yum install -y centos-release-SCL

RUN yum install -y python27

# This whl package is generated by previous build steps.

ADD python/dist/paddlepaddle-0.10.0-cp27-cp27mu-linux_x86_64.whl /

RUN pip install /paddlepaddle-0.10.0-cp27-cp27mu-linux_x86_64.whl && rm -f /*.whl

Then build the image by running docker build -t [REPO]/paddle:[TAG] . under

the directory containing your own Dockerfile.

- NOTE: You can choose a different base image for your environment; the available versions are listed in the nvidia/cuda image repository.

Use Docker Images

Suppose that you have written an application program train.py using PaddlePaddle. You can test and run it using docker:

docker run --rm -it -v $PWD:/work paddlepaddle/paddle /work/train.py

This works only if all dependencies of train.py are already in the production image. If they are not, you need to build a new Docker image from the production image with the additional dependencies installed.
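One way to build such an image is to generate a small Dockerfile that starts from the production image and installs the extra packages. The sketch below only prints the Dockerfile. The function name app_dockerfile and the example package names are hypothetical, and it assumes pip is available inside paddlepaddle/paddle.

```shell
#!/bin/sh
# Hypothetical helper: print a Dockerfile that extends the production image.
# $1: application script (e.g. train.py); remaining arguments: pip package names
app_dockerfile() {
    [ "$#" -ge 1 ] || { echo "usage: app_dockerfile SCRIPT [PACKAGE...]" >&2; return 1; }
    app="$1"
    shift
    echo "FROM paddlepaddle/paddle"
    # Only emit an install step when extra packages were requested.
    if [ "$#" -gt 0 ]; then
        echo "RUN pip install $*"
    fi
    echo "ADD $app /work/$app"
}

# Example package names only; replace them with what train.py actually imports.
app_dockerfile train.py scipy pandas
```

Redirect the output to a file named Dockerfile next to train.py, build it with docker build -t myapp . and then run the application from the new image instead of paddlepaddle/paddle.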

Run PaddlePaddle Book In Docker

Our book repo also provides a docker image that starts a Jupyter notebook inside docker, so that you can run the book using docker:

docker run -d -p 8888:8888 paddlepaddle/book

Please refer to https://github.com/paddlepaddle/book if you want to build this docker image yourself.

Run Distributed Applications

In our API design doc, we proposed an API that starts a distributed training job on a cluster. This API needs to build a PaddlePaddle application into a Docker image as above and call kubectl to run it on the cluster. It might need to generate a Dockerfile like the one above and call docker build.

Of course, we can manually build an application image and launch the job using the kubectl tool:

docker build -f some/Dockerfile -t myapp .

docker tag myapp me/myapp

docker push me/myapp

kubectl ...

Docker Images for Developers

We have a special docker image for developers: paddlepaddle/paddle:<version>-dev. This image is also generated from https://github.com/PaddlePaddle/buildtools.

This development image contains only the development tools and standardizes the build procedure. Its users include:

- developers -- no longer need to install development tools on the host, and can build their current work on the host (development computer).

- release engineers -- use this to build the official release from a certain branch/tag on GitHub.

- document writers / Website developers -- Our documents are in the source repo in the form of .md/.rst files and comments in source code. We need tools to extract the information, typeset, and generate Web pages.

Of course, developers can install build tools on their development computers. But different versions of PaddlePaddle might require different sets or versions of build tools. Collaborative debugging is also easier if all developers use a unified development environment.

The development image contains the following tools:

- gcc/clang

- nvcc

- Python

- sphinx

- woboq

- sshd

Many developers work on a remote computer with GPUs; they could ssh into the computer and then docker exec into the development container. Running sshd in the container, however, allows developers to ssh into the container directly.

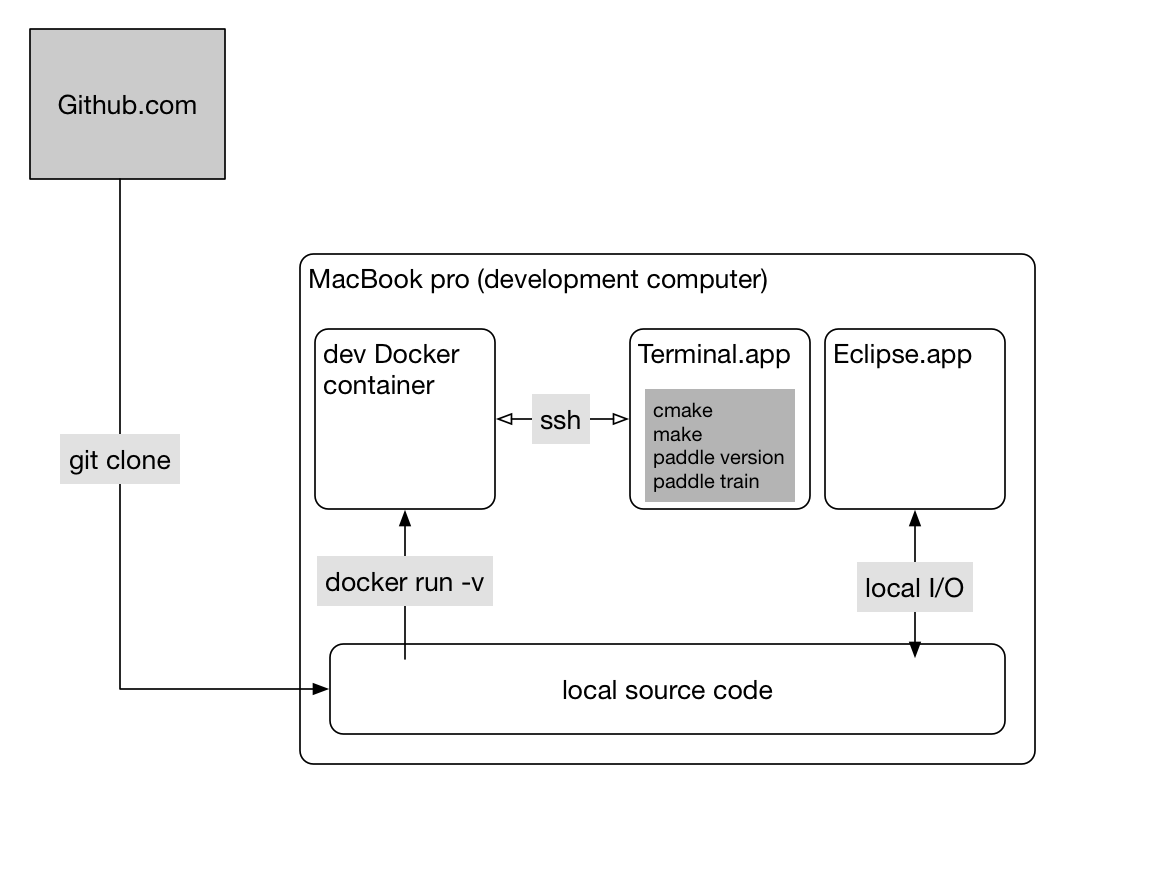

Development Workflow

Here we describe the workflow, starting from the daily development environment.

Developers work on a computer, which is usually a laptop or desktop, or they might rely on a more powerful machine (for example, one with GPUs).

A principle here is that source code lies on the development computer (host) so that editors like Eclipse can parse the source code to support auto-completion.

Reading source code with woboq codebrowser

For developers who are interested in the C++ source code, please use -e "WOBOQ=ON" to enable the building of C++ source code into HTML pages using Woboq codebrowser.

- The following command builds PaddlePaddle, generates HTML pages from the C++ source code, and writes the HTML pages into $HOME/woboq_out on the host:

docker run -v $PWD:/paddle -v $HOME/woboq_out:/woboq_out -e "WITH_GPU=OFF" -e "WITH_AVX=ON" -e "WITH_TESTING=ON" -e "WOBOQ=ON" paddlepaddle/paddle:latest-dev

- You can open the generated HTML files in your Web browser. Or, if you want to run an Nginx container to serve them to a wider audience, you can run:

docker run -v $HOME/woboq_out:/usr/share/nginx/html -d -p 8080:80 nginx